SEOULTECH IXLAB

The SEOULTECH Interaction Lab (IXLAB) explores the future of human-computer synergy through AI-infused interactive computing. We focus on designing intelligent, adaptive systems that understand complex human behaviors and contexts via multimodal sensing. By bridging adaptive intelligence with immersive environments, we strive to create purposeful interaction paradigms that empower individuals in an increasingly automated and augmented world.

Latest News

| Date | News |
|---|---|
| Aug 05, 2025 | 📄 One paper accepted to UIST 2025 Poster track! |
| Apr 18, 2025 | 🏆 Received Best Paper Honorable Mention for CHI 2025 paper! |
| Apr 18, 2025 | 🏆 Received Best Paper Award for IUI 2025 paper! |
| Feb 24, 2025 | 📄 One paper accepted to CHI 2025 LBW! |
| Feb 03, 2025 | 📄 Two papers accepted to ACM CHI 2025! |
| Feb 03, 2025 | 📄 One paper accepted to ACM IUI 2025! |
| Oct 01, 2024 | 📄 One paper accepted to SIGGRAPH Asia 2024 as a poster! |
| Aug 02, 2024 | 📄 One paper accepted to ISMAR 2024! |
| Apr 30, 2024 | 📄 Our paper accepted to IJHCI! (IF: 4.7, 21.9%) |
| Apr 30, 2024 | 📄 Our paper accepted to EAIT! (IF: 5.5, 6.9%) |
| Apr 01, 2024 | 💰 New research project funded by WISET! |
| Mar 02, 2024 | 📄 One paper accepted to CHI 2024 LBW! |

Research Topics

Immersive Spatial Computing

We investigate the intersection of spatial usability and hardware mediation to optimize user immersion and interaction within AR, VR, and MR environments. By bridging the gap between physical and virtual worlds, we aim to design intuitive interaction paradigms for mixed reality workspaces and enhance cognitive augmentation through spatially aware interfaces.

Selected publications:

- [CHI '25] Through the Looking Glass, and What We Found There: A Comprehensive Study of User Experiences with Pass-Through Devices in Everyday Activities

- [IUI '25] A picture is worth a thousand words? Investigating the Impact of Image Aids in AR on Memory Recall for Everyday Tasks

- [CHI '25] Understanding User Behavior in Window Selection using Dragging for Multiple Targets

Intelligence-Augmented & Generative Interaction

We leverage Generative AI and Large Language Models (LLMs) to create adaptive, personalized content and intelligent interfaces that expand the boundaries of human creativity. We study how AI can act as a collaborative partner in creative tasks, ensure safe and appropriate content generation, and deliver context-aware information in spatial computing environments.

Selected publications:

- [IJHCS '26] SnapSound: Empowering everyone to customize sound experience with Generative AI

- [PeerJ CS '24] Mitigating Inappropriate Concepts in Text-to-Image Generation with Attention-guided Image Editing

- [ISMAR '24] Public Speaking Q&A Practice with LLM-Generated Personas in Virtual Reality

- [SIGGRAPH Asia '24] Designing LLM Response Layouts for XR Workspaces in Vehicles

- [CHI '23] Tingle Just for You: A Preliminary Study of AI-based Customized ASMR Content Generation

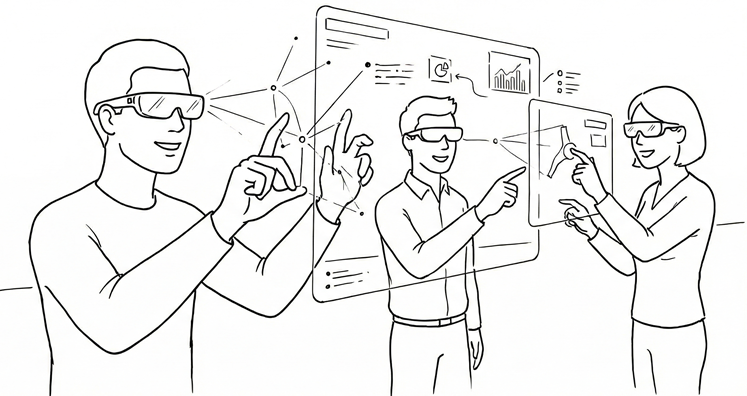

Multimodal Sensing Interfaces

We explore innovative input modalities to enable seamless and discreet communication in diverse social and digital contexts. Our work focuses on developing novel sensing techniques that interpret silent speech, non-verbal sounds, and subtle gestures to facilitate natural interaction in both virtual and physical spaces.

Selected publications:

- [UIST '25] Silent Yet Expressive: Toward Seamless VR Communication through Emotion-aware Silent Speech Interfaces

- [CHI '25] Exploring Emotion Expression Through Silent Speech Interface in Public VR/MR

- [EAIT '25] Enhancing Learner Experience with Instructor Cues in Video Lectures: A Comprehensive Exploration and Design Discovery toward A Novel Gaze Visualization

- [IJHCI '24] EchoTap: Non-verbal Sound Interaction with Knock and Tap Gestures

- [ICMI '21] Knock & Tap: Classification and Localization of Knock and Tap Gestures using Deep Sound Transfer Learning

Location

Frontier Hall 319-2, Seoul National University of Science and Technology

Sponsors & Partners

Research collaborators and funding agencies.